Jun 2025

Notes on Aesthetic Constant

After releasing Constant, I struggled with my decision to put out a work with such an austere aesthetic expression. I joked to my partner that I would redo the project with more regard to its visual appeal and release a new version in a few months.

A year later, Aesthetic Constant is ready for release. The didactic title also holds a more complex question: what visual elements hold our attention?

Aesthetic Constant does not reach the visual complexity of a Kim Asendorf piece, which the market rewards for its complexity and dynamism, but it answers this question with the complexity of natural motion.

As in Natural Static, the motion data in Aesthetic Constant was captured from the real world. Whereas Natural Static is fueled by videos of flowing water, the dataset of Aesthetic Constant is much more compact. To generate it, I filmed friends in Montreal walking on a treadmill, isolated one walk cycle from that video, and ran machine-learning pose detection on those frames. The resulting dataset is small enough to store on the blockchain, but preserves the unique character of each walker.

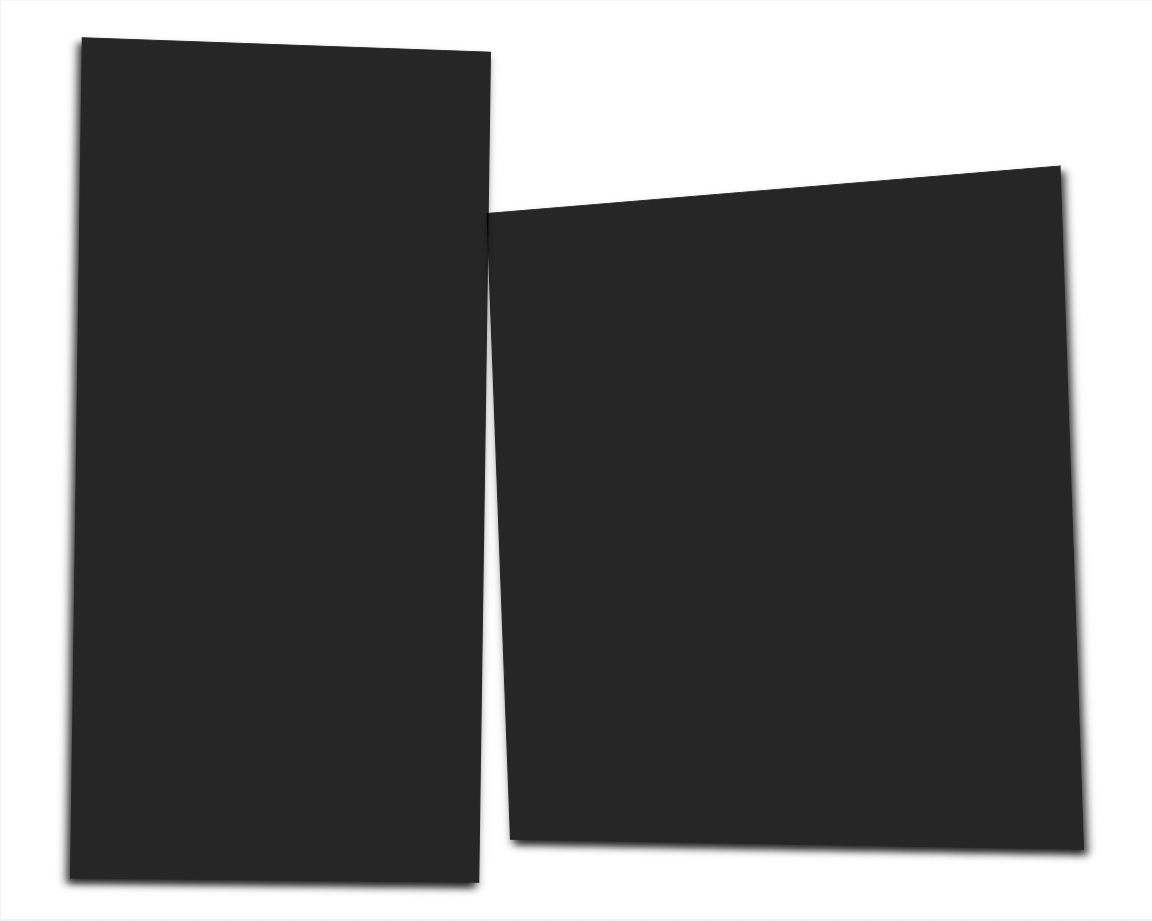

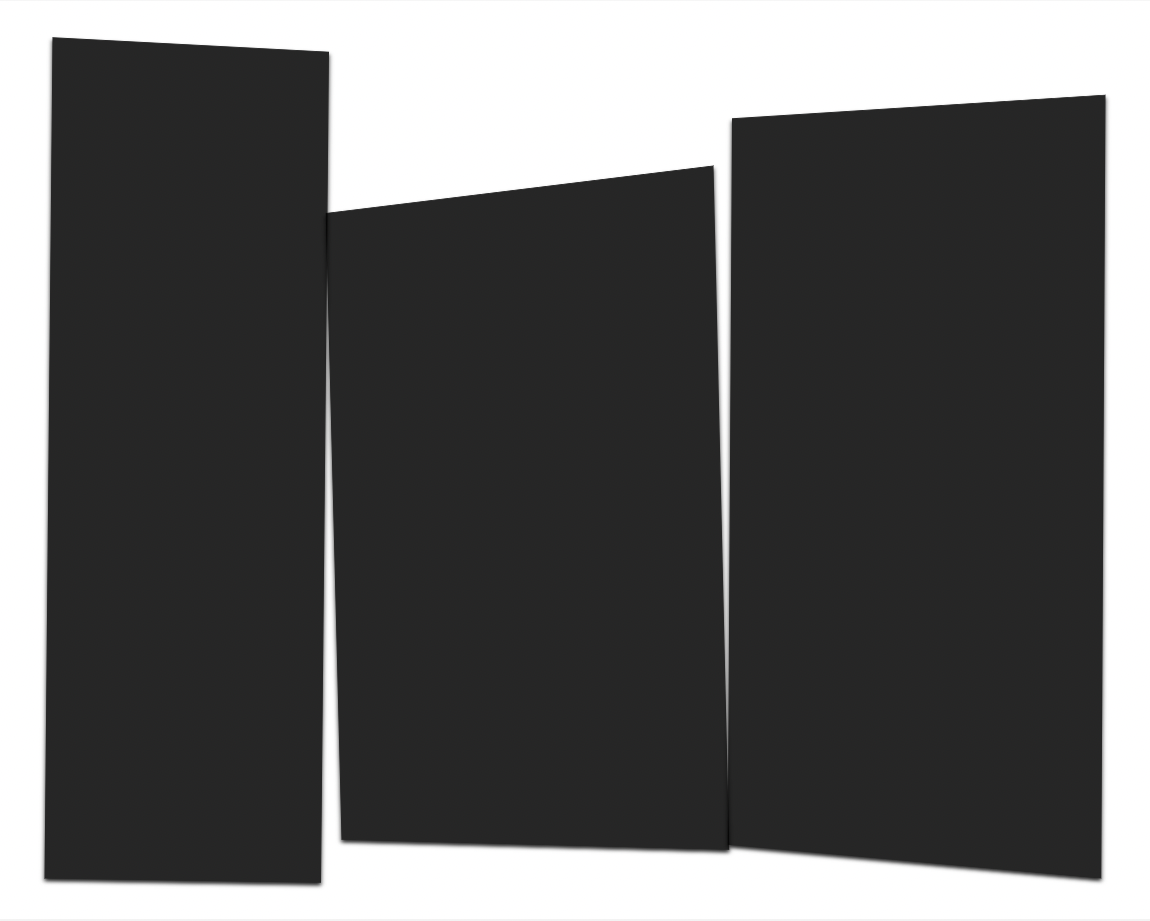

With Constant, my aim was to express that we are in relation to each other when we interact on the blockchain. Initially, Constant was going to be just two blocks, and each transfer of the work would reconfigure the blocks depending on the wallet addresses of buyer and seller. As I got close to release, I realized the changes were so small that they might be lost, so I instead added one block with each transfer; the work becomes a visual record of the people it's passed through.

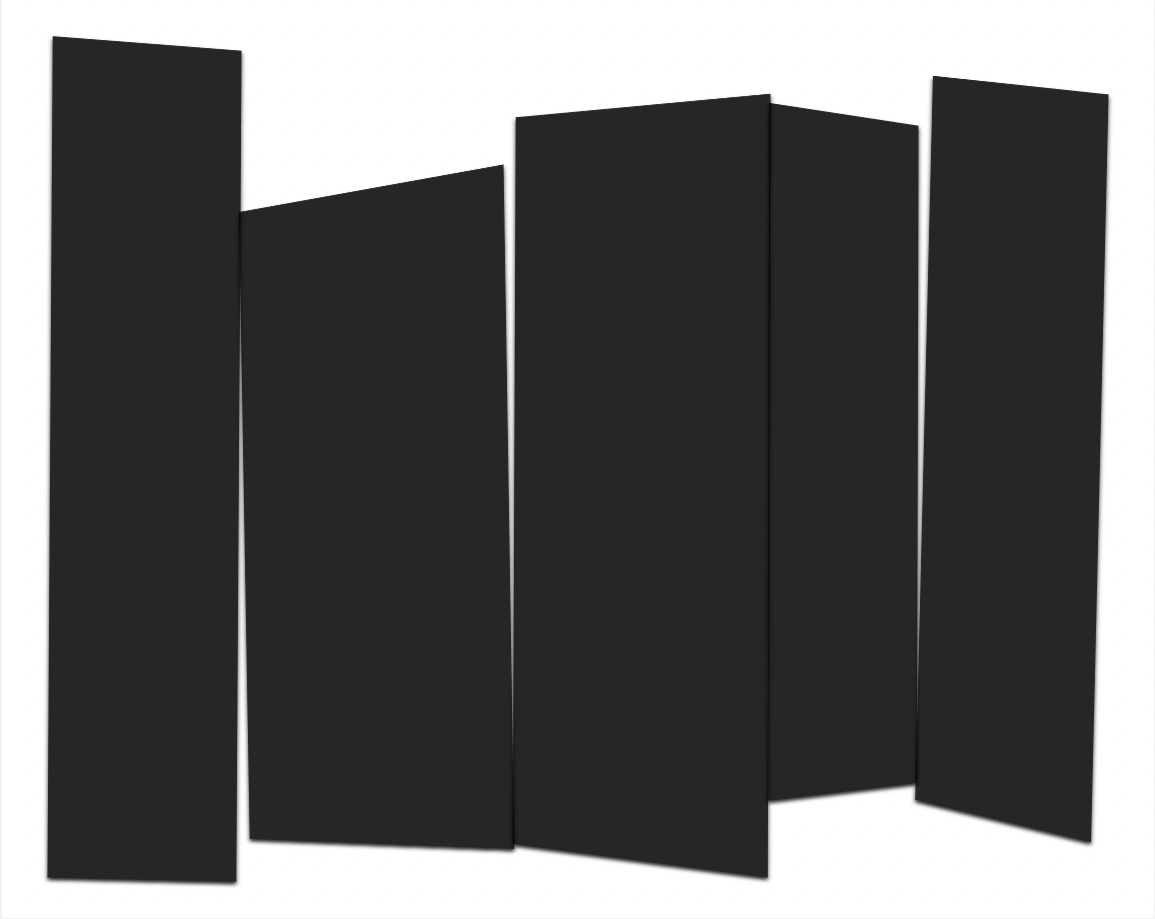

Seeing how participants explored this idea was immensely satisfying. Collectors orchestrated the transfer of the token across multiple wallets, or bounced it between two wallets they owned, creating relational compositions. CSA_2D7 took the work to its furthest imaginable extent, initiating the transfer of Constant 11, which currently sits at 148 transfers.

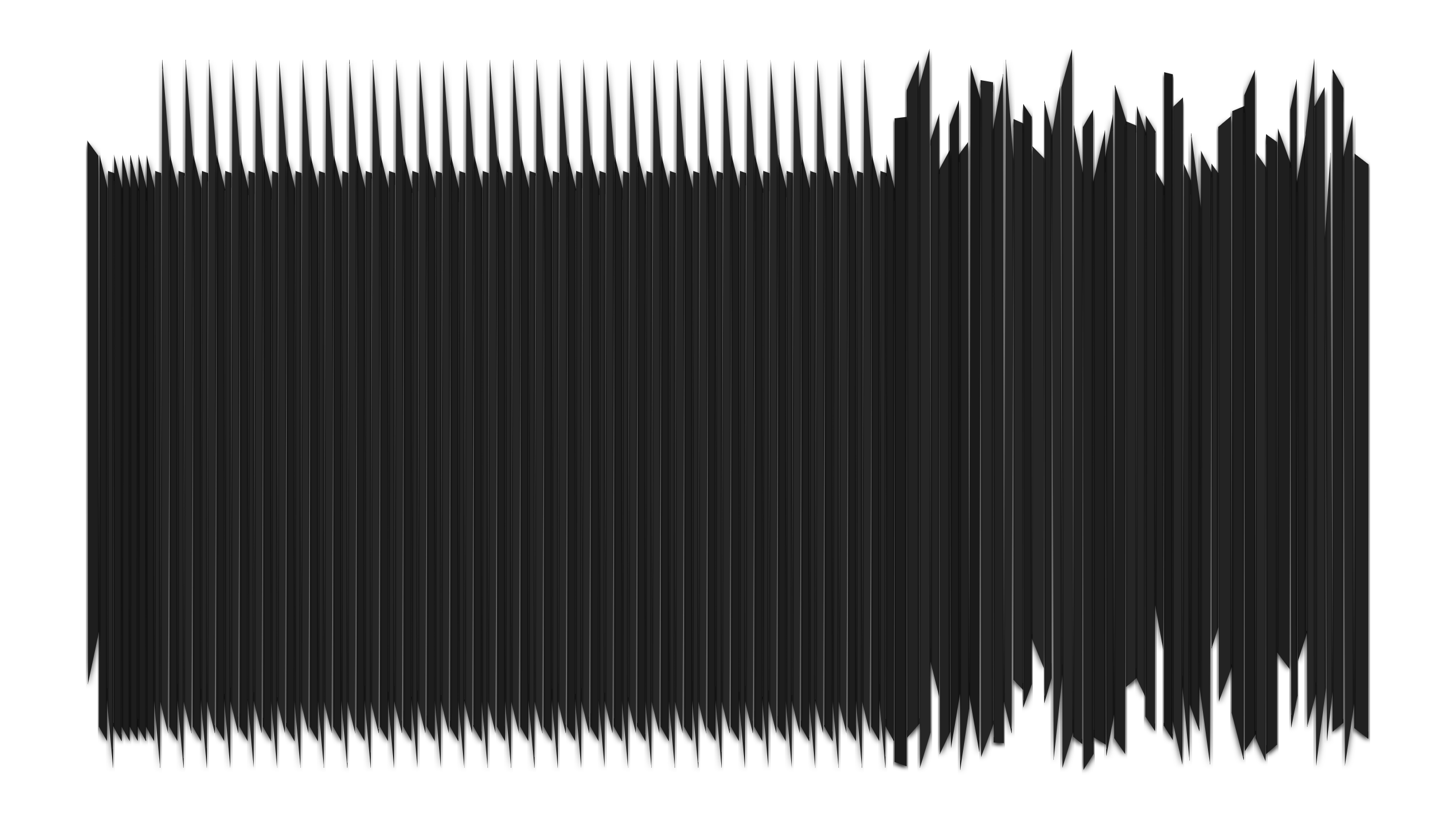

The journey of Constant 11 inspired the private mint mechanic for Aesthetic Constant. Keeping the mint private meant that I could also add an invite mechanic, allowing minters to invite other minters, and collectively control the edition size.

The reward for inviting a minter is half the mint fee of 0.069 ETH. This reward is integral to the piece, as it draws a tension against the saccharine gesture of connection, and asks if we’re here for the community or for the financial reward.
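As a small worked example of the split described above, the arithmetic can be done in wei (the integer unit Ethereum uses) so that no rounding is involved. This is only a sketch: the function name is hypothetical, and where the other half of the fee goes is not stated here, so it is simply labeled as the remainder.

```python
WEI_PER_ETH = 10**18

# Mint fee stated in the text: 0.069 ETH, expressed as an integer number of wei.
MINT_FEE_WEI = 69 * 10**15


def split_mint_fee(fee_wei: int) -> tuple:
    """Return (inviter_reward, remainder), with the inviter receiving half the fee.

    Hypothetical helper for illustration; it is not taken from the mint contract.
    """
    inviter_reward = fee_wei // 2
    return inviter_reward, fee_wei - inviter_reward


reward, remainder = split_mint_fee(MINT_FEE_WEI)
print(f"inviter reward: {reward} wei, remainder: {remainder} wei")
```

Half of 0.069 ETH is 0.0345 ETH per invited minter.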

The appearance of each work is generated from the wallet addresses of the inviter and invitee. The render mode is selected by combining the two addresses, and the gait of each figure is derived from the individual wallet addresses of inviter and invitee. If a token is transferred to a new wallet, or sold on the secondary market, the transfer will result in a new appearance, new wallets generating new outputs.
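The general idea can be sketched in a few lines. This is an illustration under stated assumptions, not the code of the render contracts: the mode names, the number of stored gaits, and the use of SHA-256 (standing in for whatever on-chain hashing or arithmetic the contracts actually use) are all hypothetical.

```python
import hashlib

# Hypothetical values: the real mode names and the number of stored gaits are assumptions.
RENDER_MODES = ["mode_a", "mode_b", "mode_c"]
GAIT_COUNT = 8


def _addr_bytes(address: str) -> bytes:
    """Convert a hex wallet address (with or without '0x') to raw bytes."""
    hex_part = address[2:] if address.startswith("0x") else address
    return bytes.fromhex(hex_part)


def pick_render_mode(inviter: str, invitee: str) -> str:
    """Select a render mode from the combination of both addresses."""
    digest = hashlib.sha256(_addr_bytes(inviter) + _addr_bytes(invitee)).digest()
    return RENDER_MODES[int.from_bytes(digest, "big") % len(RENDER_MODES)]


def pick_gait(address: str) -> int:
    """Select a gait index from a single address."""
    digest = hashlib.sha256(_addr_bytes(address)).digest()
    return int.from_bytes(digest, "big") % GAIT_COUNT


def appearance(inviter: str, invitee: str) -> dict:
    """Combine the pair-level and individual-level choices into one description."""
    return {
        "render_mode": pick_render_mode(inviter, invitee),
        "inviter_gait": pick_gait(inviter),
        "invitee_gait": pick_gait(invitee),
    }


# Placeholder addresses for demonstration only.
alice = "0x" + "11" * 20
bob = "0x" + "22" * 20
print(appearance(alice, bob))
```

Because every choice is a pure function of the addresses, the same pair always yields the same appearance, and swapping in a new wallet changes the inputs and therefore the output.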

The works are generated by a series of on-chain render contracts, and are animated by gait information that is also stored on-chain. Working on-chain imposes limitations. Contracts have a file size limit, and render operations have a gas limit. I find it an interesting challenge to see what work can be created within these limits, and how these limits can be stretched. For this project, I deployed three separate render contracts and a contract for combining and storing the gait data, alongside the mint contract.

Working with SVG also imposes its own limits. Certain operations are easy to produce, while others remain difficult. The aesthetics of this project are informed by the limits of both the Ethereum blockchain and the SVG standard. As Rafaël Rozendaal says, ‘Art is the encounter of a person and a material. The person has intentions and wishes, and the material has intentions and wishes.’

The material of blockchain is not just a settlement layer for the exchange of images. It is a rich relational material, where the aesthetic component of a work is just one element in the communo-social dynamics of a release, and through which deeper questions of community and connection can be asked. Are you my friend, or my exit liquidity? Are we mutually exploiting each other, or are we bound together with a genuine excitement for a new artistic movement that will come to define future generations of practice?

aestheticconstant.jonathanchomko.com

May 2025

Aesthetic Constant

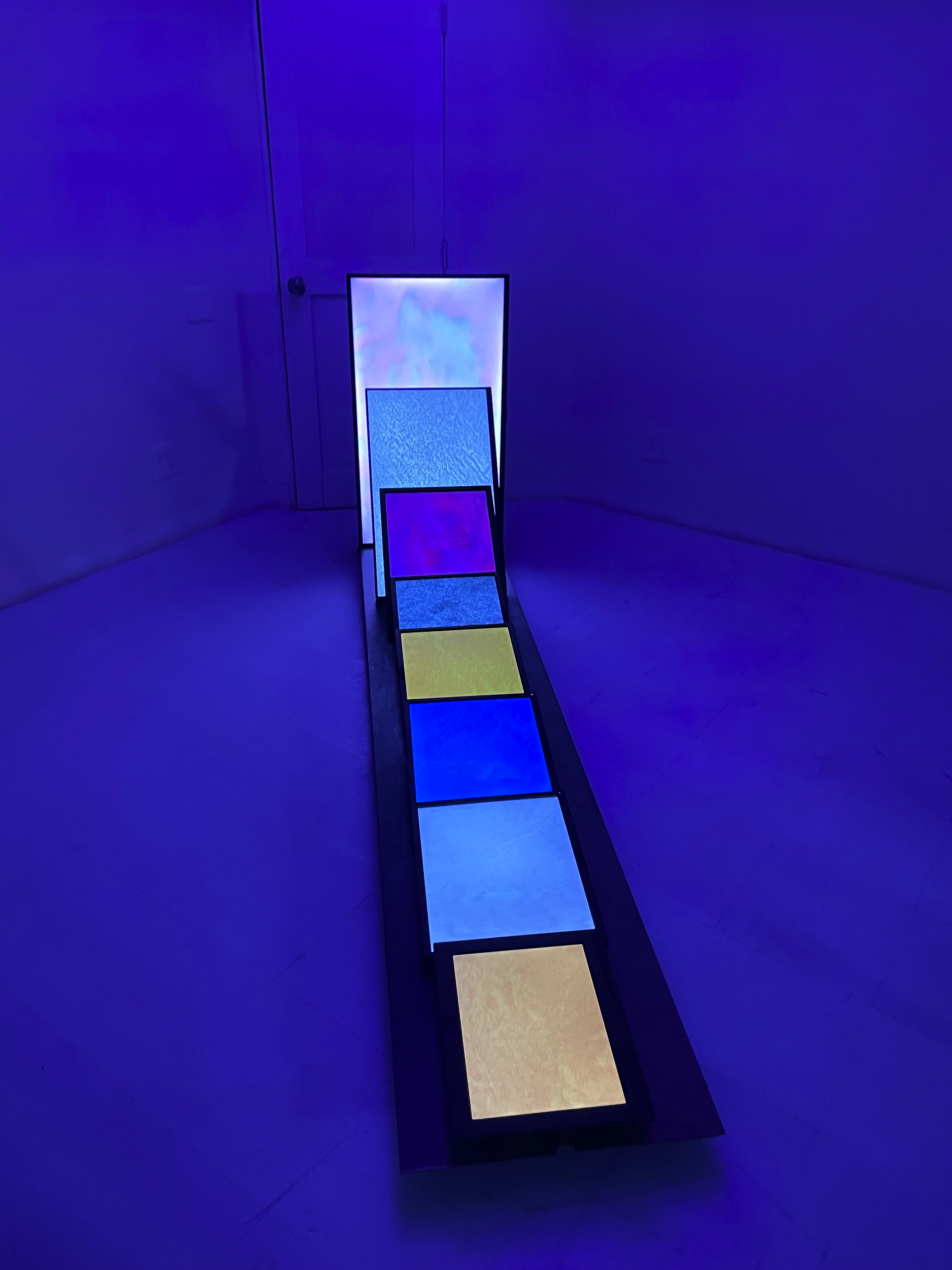

Pareidolia means “false image” in Greek, and it refers to the phenomenon of seeing faces where there aren’t any, in clouds or rocks. The human mind is so eager to recognize the other that it makes faces from nothing. The animations in Jonathan Chomko’s collection Aesthetic Constant are abstractions that play with pattern recognition, though not the kind that evokes the face. The dots that bounce and bob in these animations are based on motion-capture footage that he took of friends walking on a treadmill in his studio. It's pareidolia for the body in motion. These dancing dots are minimal traces of Chomko’s friends, and yet we can recognize them instantly as a document of human movement.

In his work with performance, interactive installations, and digital art, Chomko devises systems that viewers can act within, or choreographs a series of actions that make the system legible. The most immediate precedent for Aesthetic Constant is Constant (2024), a collection of NFTs that evolve as they are transferred, each work a pair of parallelograms that shift in size and position as the token moves from one digital wallet to another. As in minimalist sculptures, the abstract slabs approximate human bodies. The collector known as CSA2D7 organized an effort to transfer one token from Constant dozens of times, so that the shapes accumulated and squeezed together. It was an example of how a participant can initiate novel behaviors within a system, yielding an outcome Chomko did not expect from the conditions he provided.

Aesthetic Constant could be a springboard for a collector’s initiative, too, though its conditions are somewhat more austere. The initial sale is limited to an invited allowlist of collectors. After minting, each of the collectors has the opportunity to invite another minter, who then can invite another. The maximum edition size of Aesthetic Constant is unbounded, but the final size will depend on what holders of the token choose to do. If they invite fewer friends to mint, then they’ll hold a rare work. But if they invite more, then the project will have a bigger footprint, and more variations in the output. Chomko has written the contract in such a way that collectors will receive half of the mint price paid by the minters they invite, creating a financial incentive to recruit collectors and rewarding people for building community around the project.

Since the bull market for NFTs of 2021, many collections have baked in mechanics to influence collector behavior. In some cases, these were conceptual engagements with the blockchain as a system. Others were mere marketing gimmicks engineered to maximize speculative trade. The fact is that both approaches recognize that the permissionless and decentralized framework of the blockchain needs some kind of human touch, some injection of meaning, to make people feel involved. NFTs codify and financialize relationships among people and between people and art. Adjustments to the workings of NFTs can make those relationships more meaningful.

The tokens in Aesthetic Constant use the wallet address of the first collector to generate an animation from Chomko’s bank of motion-capture data. After the initial sale, the animations will be derived from a mixture of wallet data, tying the token to the new collector and to the one who invited them. A transfer will alter the animation along the same principle. Aesthetic Constant gives a visual, material form to the way associations and memories take shape when an object changes owners, or when ownership is connected to friendship. As in Constant, the abstract forms stand in for bodies, and their ever-changing forms remind us that behind every wallet is a unique individual. This is a work about identifying something human in a technological system designed to minimize dependence on humanity—a play with blockchain pareidolia. Chomko can still recognize his friends’ unique gaits in those bouncing, bobbing dots. What do they mean to you?

Brian Droitcour is the former Editor-In-Chief at Outland, and a former Associate Editor at Art in America. Brian is currently writing Rough Idea, exploring how artists are using emerging technologies, and how these technologies are creating new kinds of artistic communities.

Aesthetic Constant is an NFT project exploring relationships on the blockchain, and is currently open for minting by invitation only at http://aestheticconstant.jonathanchomko.com/

January 2025

Discord Chat with CSA_2D7

This transcript is a partially edited conversation between Jonathan Chomko and CSA2D7 regarding Constant 11 (an Ethereum art object), Direct (a performance modifying Constant 11), and CSA Mover (a smart contract on Ethereum written by 0xfff used to automate part of the performance). An unedited second conversation took place in the CSA2D7 Discord between Jonathan and CSA, with the intent to simulate a conversation on a bench in McCarren Park, similar to a first conversation that took place in person between Jonathan and CSA a few weeks before, near McCarren Park. This medium was partly influenced by Nina Sobell and Emily Hartzell’s “ParkBench, VirtuAlice: Alice Sat Here.”

CSA2D7 Heya Jonathan. Just chilling here ready for you whenever you are

Jonathan Chomko Hey CSA good afternoon!

As I've been thinking about this chat, I wondered how you got into "Constant"?

CSA2D7 Well, the first time I heard about you was through JPG, which was... how to describe it, a decentralized gathering place where Canonites tried to define the next artistic canons for NFTs through social consensus (i.e., arguing, agreeing, voting); founded by MP (María Paula Fernández), Trent (Trent Elmore), and Nic (Nic Hamilton) amongst others. Misha, xsten, WMP, Simon Denny, Chainleft, Tokenfox are all JPG alumni.

So I kind of followed your work from a distance. It was your work “Natural Static” w/ Brian Droitcour and JPG where you kind of popped up again on my radar

And then when you announced "Constant" on X, I got really interested. It kind of hit on some interesting things that were happening in the scene at the time - esp. the onchain aspect

Now that you've had some time to kind of gain some distance from the release of the project, how do you think about it (now vs. then)?

Jonathan Chomko Nice I love JPG, they are a good crew of people!

When I was working on "Constant" there were a few themes I was focusing on; the idea of representing relationships between people on the blockchain, and the idea of making a work that is not static; that changes each time it's transferred.

Usually when I get close to releasing a project I start thinking a lot about elements that are perhaps not central to my interest in the project - I think about how the market will respond, whether it has enough generative variation, etc.

I'm also realizing the more I release projects, the easier it is to try to put too many ideas into one project, and it's better to keep things simple if possible. Let each project ring like a bell, if it can

But looking back now, I'm happy with the decisions I made, and that the ideas resonated with certain people, yourself included!

CSA2D7 It certainly resonated with me. At our last meeting you talked about its aesthetics and how you didn't think it was necessarily an aesthetically-pleasing piece. Can you talk a little bit about how you thought about the project? You mentioned some of Harm Van Den Dorpel's work as a potential route you didn't take

Jonathan Chomko Yeah, I'm happy it did!

In the post, I wrote that I didn't take the Harm-esque approach because it was too visually dense. I was also interested in pure composition. It's very easy in generative art to have lots of elements, and they signal complexity and value, and I felt like I had done that gesture in Natural Static

What I meant about aesthetics is that this work doesn't primarily make an appeal to value through its aesthetics. I feel like a lot of generative art says 'look at what I can do with code, look at the complexity I can achieve with code'. And "Constant" doesn't do that - the aesthetics are there as one part of a larger gesture.

The aesthetics are kinda there to only illustrate the blockchain dynamics, and to point to a sort of relationality that blockchain enables.

I'd be curious to know more about when you decided to embark on the building up of “Constant 11”.

CSA2D7 Yes! The aesthetics illustrate blockchain dynamics, and that relationality is how I always saw the pieces, as building blocks, and that’s why using “Constant 11,” with each 1 representing the two starting blocks was kind of a sign for me.

This was something I was starting to think about when “Constant 0” started to get passed around amongst artists, including Rhea Myers, who was a significant influence on “Constant.” The figure of “0” also matches the circle we made (starting with the original transfer, Jonathan to CSA, and ending with Jonathan to CSA).

With respect to “11,” I had to wait for the collection size to reach that magic number before I could acquire it.

Blockchain dynamics aside, there is an aesthetic similarity to Vera Molnár's work “100 trapèzes” when “Constant 11” starts off (Vera’s work posted below for reference)

And w/ Vera's work you are thinking about motion (trapeze), but her work is static

And in her work there is a distance between each of the blocks, whereas you intentionally have the blocks touching?

![]()

Jonathan Chomko I was so happy to see you post this work of Vera's, I hadn't seen it before! I can also see figures in her work, relationships.

Having the blocks touch was a key part of my concept from the beginning. I was thinking about relationship dynamics, and how two figures touching or leaning on each other can represent those dynamics.

When the piece is first minted, there are two blocks, representing the wallet address of the previous and current minter. The original idea was that there would only be two figures, and each time the piece changed hands the composition would shift.

With only two figures I was worried that the transfer might not be visible, so I made the transfer additive, each new transfer adding a block to the composition. The piece is responsive, so in theory the work can be scaled to allow each block to be full size, but in the window of an NFT marketplace the work becomes squished, horizontally compressed.

I didn’t actually think anyone would transfer it more than a few times, but I was really excited to see what people did. You organized a transfer of “Constant 0” to a whole group of different artists and collectors, which created a lovely organic pattern. And 0xfff made some interesting transfers as well, sending it to themselves to create repeating patterns.

With “Constant 11” it’s been really fun to see the different modes express themselves in the pattern.

CSA2D7 Yea! I mean John Gerrard did a talk in here recently, asking how do we (the crypto, nft, token folk) link back to the Algorists, and this aesthetic relationship to Vera’s work popped in my head. CSA note: John’s talk with Cody Edison on December 20, 2024 in the CSA Discord, “The Last Slides,” completed John’s original lecture at the Digital Art Symposium, LACMA’s Art and Tech Summit in Miami that took place a few weeks before on December 3, 2024.

For all the motion Vera's piece intends to convey, it is completely still and unchanging - of course there were limits to what she was able to do in her time

But that's kind of where I get excited about “Direct” and "Constant"

The idea of building this piece that is visually simple but almost incomprehensible. Is that really 118 transfers? How are we going to understand it visually if it gets exponentially bigger?

Just like seeing the Himalayas up close, we see it but can we really comprehend how dense it is with just our eyes?

So, in this sense, “Constant 11” is for thinking about the blockchain, about things we can't really comprehend, and there's just a simpleness to the project, aesthetically, and conceptually that tie it together

Jonathan Chomko That's really nice to read! I think one of the things that keeps on drawing me back to blockchain work is that there is interaction that is possible with other people. You put up a proposition, and people meet you on the other side and carry the work on.

CSA2D7 Absolutely. This is not right-click-save art, right? I mean, I made a comment to you a little while back that I wanted this work to be the largest sculpture on the blockchain. You kind of laughed it off, because honestly, who knows what that means? But it's the direction we are trying to seek, right? Aim for Richard Serra and Robert Smithson, large; abstract; sculpture; in a way that is only possible on the blockchain through this participatory performance. “Work comes out of work.”

Can you talk a little bit about your experience with blockchain work (including past projects) because I feel like you are right in it. And we should definitely have 0xfff comment on that in the future, because they too are right in it and created the automated portion of “Direct” (w/ their contract “CSA Mover”) specifically for “Constant 11.” They were also part of the original group of artists in “Constant 0.”

Jonathan Chomko Yes, very grateful for 0xfff's contributions, they were also a big supporter of the project initially

CSA2D7 Yes, and 0xfff started the self-transfer (which you previously mentioned) which we homaged in one of the early phases as well

Jonathan Chomko Yeah 0xfff did a lot of fun experiments! I've been doing blockchain stuff since about 2021. I think I've always been curious about where the value is in art on the blockchain.

My first big project was Proof of Work, which I feel was asking the question 'is a work valuable because a lot of effort has been put in it?'

I feel like "Constant" is saying that value lies in relationships; that it's in the space between buyer and seller that value exists.

CSA2D7 On the "Constant" webpage, there's actually a little generator to test out what "Constant" would look like after X transfers. For me it always stopped working after like 27 transfers or something... but that's clearly not an issue right now for “Constant 11” right? We're at 118 or so, and it is currently in John Gerrard’s possession. Part of testing for me was that little generator, and “0.”

Jonathan Chomko On the "Constant" page the simulator uses a pre-set array of wallet addresses, so it might stop working when that array is exhausted. But "Constant" is rendered on-chain differently from say “Draw by Computer” - I've calculated that it should be able to render up to about 7,000 transfers

CSA2D7 7,000 transfers is a lot. If we reach 7,000... I think we should call up Sotheby's or Christie's

Jonathan Chomko Haha yeah 7,000 is very many

CSA2D7 You wrote: "You put up a proposition, and people meet you on the other side and carry the work on." and I think that participatory aspect is really significant to "Constant"

What we're doing with “Direct,” is kind of a natural extension of "Constant", right - but I think where we might differ is how we see that relationship?

I think I read somewhere that you saw it as kind of a superficial thing - it's just the wallet, but I think I see it as something more - ownership

Jonathan Chomko I think what I meant was that the wallet address doesn't represent a whole person

CSA2D7 Yes! Absolutely. It doesn't

And I agree, but I think there's a transactional depth to letting someone hold "you" (or your artwork, a piece of you) in their wallet and determine the disposition of that thing

Jonathan Chomko Yeah there definitely is! Esp as the work gets denser and denser

CSA2D7 Like when we think about control - you mentioned at coffee that technically, the piece is not generated through the EVM but your server (you can explain this better than me)

So there is still an aspect of control that you theoretically have over the piece as it moves - yes? And this is the nature of some NFTs where the original author still retains some control over how the work appears (esp. if it's a pointer with IPFS)?

Even if it's not in the original author's wallet

Jonathan Chomko I don't actually have any control over the work via the contract, whoever's wallet it's in has full authority to send it or keep it

CSA2D7 Agreed - but you have the ability to shut off your server and control how the work appears?

Jonathan Chomko Yeah to some degree - the way "Constant" is rendered is that an html file with an embedded javascript script is generated on the contract.

The image link in the NFT's json points towards my server, which runs the javascript on the generated html page, and returns the SVG to the marketplace.

So there is an intermediary render step, but this render step could also be done by a standalone / arweave hosted page that would provide an unmediated view of what is on the contract, fully out of my control.

CSA2D7 I see, so you're saying if your server goes down, if we also have a standalone / arweave image, that arweave image would not be affected

Jonathan Chomko yeah exactly

and that this idea of transaction depth or trust is still there - there is no recourse if someone doesn't transfer it properly, or just keeps it

CSA2D7 This is still a form of influence / control though

Agreed. I was just trying to get to the contours of the influence / control that each participant has over the piece

I think there's a technical influence that you as the original creator have

And there's also probably a stronger reputational influence that the project has

i.e., who wants to be the person that steals “Constant 11” - although it's possible. I don't know all of the participants. And that’s part of what makes the art object and concept seductive - we are aiming for the largest, the densest, but at any moment the flipside could occur - it could be lost, it could get stolen, it could get halted. And what does it mean to “lose” something, if we can still track it and see it. Is it lost if it's forever viewable as public art?

That question of loss aside, and the two aforementioned influences aside, there is this really deep trust and control that each participant has over the work when they are in possession of it. There is this list that the participants can choose from (generated through my account on X), but they don’t necessarily have to. I was speaking with John Gerrard about this, and he was cycling between a couple of interesting choices. You don’t have to go with the list, if you feel certain about a choice.

And that's part of what makes it an interesting work. Right, if we just went with phase 2, the “CSA Mover” contract that 0xfff created, for all of the transfers, we wouldn't have this test of what it means to possess a thing, or what it means to lose a thing

If we had relied solely on “CSA Mover,” all of the participants who wanted to take part could just join 0xfff's contract and let me automate the movement without there being a test of ownership

Jonathan Chomko I see yeah! But I think it was important for you to have people hold it and make the choice

CSA2D7 I think that's part of the performance that I want to stress in this manual phase, that each participant is choosing the next one, it's a form of, a kind of, social trust that's pretty strong right?

It's like a form of decentralized KYC to an extent

Are you going to pass to someone you don't know, or someone that you do know, and how do you know them, etc.

Each transfer has a little story (or maybe a large story) behind it, like starting off this manual transfer phase with Nina Roehrs, who I have distance from, but also recognize as an important figure in this token-as-art movement

Jonathan Chomko Yeah - we can see paths of trust in how it's transferred

CSA2D7 It's such a simple project but there are so many things you can kind of see. It's kind of an ethnography of this current scene we are in

A lot of which is mediated through X and Discord... btw I saw on X that you have a new project called “Aesthetic Constant?”

Jonathan Chomko Something that I realized in our IRL conversation is how much the blockchain record is part of the piece. Like someone could generate an aesthetically similar piece, but the eventual value of this piece is in its history, which cannot be faked

Yeah “Aesthetic Constant!”

CSA2D7 It's funny because one of the criticisms I've seen or maybe felt about the project is that things similar to this participation have existed in the past, from the 1960s (Alighiero Boetti, “Viaggi postali (Postal Voyages),” On Kawara, “I got up,” etc.)

In the Rhizome Discord, I saw someone mention that they had an Instagram project which posted all of the DMs they received on their public page before they got deleted by Instagram

But I see “Direct” as very distinct from these projects; despite centralized social media (X, and Discord) playing a function and being a part of it; “Direct” is still very much about decentralized participation

Jonathan Chomko I think it's valid to compare it to other types of communication art, but I think it's a gesture that is worth making again on the blockchain, partly because the art can be dynamic, and because of the implications of value and trust.

CSA2D7 Absolutely. It's decentralized, and on the blockchain. This will be an art object at the end of the day that I think has no precedent.

Jonathan Chomko I'm really grateful for the work you're putting in to realize this vision!

CSA2D7 It’s the three of us, and all the participants

Jonathan Chomko True! A collective work

CSA2D7 Constant aside - I was asking you about “Aesthetic Constant?”

Jonathan Chomko Yeah! My vision with the “Aesthetic Constant” is to release a series of the walking animations I've been posting.

CSA2D7 Yes! I love the walking animations that you previously released with Mack - please tell us about that too and how it informs (if it does) “Aesthetic Constant”

Jonathan Chomko The previous animations were recorded from my Shadowing installation. Working on that release I was reminded of how easy it is for us to understand human motion, even with very small amounts of data. It’s one of the patterns our brains are wired to recognize.

“Aesthetic Constant” also builds on “Draw by Computer,” in that they will be on-chain generated animated SVGs.

CSA2D7 Yes! I thought there was this aesthetic link there (and conceptual)

Jonathan Chomko Yeah! I'm also thinking of release dynamics that build on what we're doing here with "Constant"

I think it'll be only allowlist for primary mint, and then each holder gets to invite one person to mint 'beside' them.

CSA2D7 I see “Shadowing,” “Draw by Computer,” and “Constant” in it

Jonathan Chomko Yeah it has all those parts! The final idea is that each participant can also choose to end their cycle instead of inviting someone to mint, so the holders have some control of the supply

CSA2D7 I kind of like having this format, it's a little weird but also forces us to respond organically to each other (vs email or something)

We also have no idea if someone is here or not, if someone will interrupt us or not, but also we're maybe performing or not lol who knows

I felt a little uptight to start, and now feel a little looser

Jonathan Chomko yeah I think it's good, also allows ppl to drop in and out, but also easily scan the convo

CSA2D7 Schrödinger's audience

CSA2D7

0xfff

Constant 11

https://constant.jonathanchomko.com/

This transcript is a partially edited conversation between Jonathan Chomko and CSA2D7 regarding Constant 11 (an Ethereum art object), Direct (a performance modifying Constant 11), and CSA Mover (a smart contract on Ethereum written by 0xfff used to automate part of the performance). An unedited second conversation took place in the CSA2D7 Discord between Jonathan and CSA with the intent to simulate a conversation on a bench in McCarren Park, similar to a first conversation that took place in person between Jonathan and CSA a few weeks before, near McCarren Park. This medium was partly influenced by Nina Sobell and Emily Hartzell’s “ParkBench, VirtuAlice: Alice Sat Here.”

CSA2D7 Heya Jonathan. Just chilling here ready for you whenever you are

Jonathan Chomko Hey CSA good afternoon!

As I've been thinking about this chat, I wondered how you got into “Constant”?

CSA2D7 Well, the first time I heard about you was through JPG, which was... how to describe it, a decentralized gathering place where Canonites tried to define the next artistic canons for NFTs through social consensus (i.e., arguing, agreeing, voting); founded by MP (María Paula Fernández), Trent (Trent Elmore), and Nic (Nic Hamilton) amongst others. Misha, xsten, WMP, Simon Denny, Chainleft, Tokenfox are all JPG alumni.

So I kind of followed your work from a distance. It was your work “Natural Static” w/ Brian Droitcour and JPG where you kind of popped up again on my radar

And then when you announced "Constant" on X, I got really interested. It kind of hit on some interesting things that were happening in the scene at the time - esp. the onchain aspect

Now that you've had some time to kind of gain some distance from the release of the project, how do you think about it (now vs. then)?

Jonathan Chomko Nice I love JPG, they are a good crew of people!

When I was working on "Constant" there were a few themes I was focusing on; the idea of representing relationships between people on the blockchain, and the idea of making a work that is not static; that changes each time it's transferred.

Usually when I get close to releasing a project I start thinking a lot about elements that are perhaps not central to my interest in the project - I think about how the market will respond, whether it has enough generative variation, etc.

I'm also realizing the more I release projects, the easier it is to try to put too many ideas into one project, and it's better to keep things simple if possible. Let each project ring like a bell, if it can

But looking back now, I'm happy with the decisions I made, and that the ideas resonated with certain people, yourself included!

CSA2D7 It certainly resonated with me. At our last meeting you talked about its aesthetics and how you didn't think it was necessarily an aesthetically pleasing piece. Can you talk a little bit about how you thought about the project? You mentioned some of Harm van den Dorpel's work as a potential route you didn't take

Jonathan Chomko Yeah, I'm happy it did!

In the post, I wrote that I didn't take the Harm-esque approach because it was too visually dense. I was also interested in pure composition. It's very easy in generative art to have lots of elements, and they signal complexity and value, and I felt like I had done that gesture in Natural Static

What I meant about aesthetics is that this work doesn't primarily make an appeal to value through its aesthetics. I feel like a lot of generative art says 'look at what I can do with code, look at the complexity I can achieve with code'. And "Constant" doesn't do that - the aesthetics are there as one part of a larger gesture.

The aesthetics are kinda there to only illustrate the blockchain dynamics, and to point to a sort of relationality that blockchain enables.

I'd be curious to know more about when you decided to embark on the building up of “Constant 11”.

CSA2D7 Yes! The aesthetics illustrate blockchain dynamics, and that relationality is how I always saw the pieces, as building blocks, and that’s why using “Constant 11,” with each 1 representing one of the two starting blocks, was kind of a sign for me.

This was something I was starting to think about when “Constant 0” started to get passed around amongst artists, including Rhea Myers, who was a significant influence on “Constant.” The figure of “0” also matches the circle we made (starting with the original transfer, Jonathan to CSA, and ending with Jonathan to CSA).

With respect to “11,” I had to wait for the collection size to reach that magic number before I could acquire it.

Blockchain dynamics aside, there is an aesthetic similarity to Vera Molnár's work “100 trapèzes” when “Constant 11” starts off (Vera’s work posted below for reference)

And w/ Vera's work you are thinking about motion (trapeze), but her work is static

And in her work there is a distance between each of the blocks, whereas you intentionally have the blocks touching?

Jonathan Chomko I was so happy to see you post this work of Vera's, I hadn't seen it before! I can also see figures in her work, relationships.

Having the blocks touch was a key part of my concept from the beginning. I was thinking about relationship dynamics, and how two figures touching or leaning on each other can represent those dynamics.

When the piece is first minted, there are two blocks, representing the wallet address of the previous and current minter. The original idea was that there would only be two figures, and each time the piece changed hands the composition would shift.

With only two figures I was worried that the transfer might not be visible, so I made the transfer additive, each new transfer adding a block to the composition. The piece is responsive, so in theory the work can be scaled to allow each block to be full size, but in the window of an NFT marketplace the work becomes squished, horizontally compressed.

I didn’t actually think anyone would transfer it more than a few times, but I was really excited to see what people did. You organized a transfer of “Constant 0” to a whole group of different artists and collectors, which created a lovely organic pattern. And 0xfff made some interesting transfers as well, sending it to themselves to create repeating patterns.

With “Constant 11” it’s been really fun to see the different modes express themselves in the pattern.

CSA2D7 Yea! I mean John Gerrard did a talk in here recently, asking how do we (the crypto, nft, token folk) link back to the Algorists, and this aesthetic relationship to Vera’s work popped in my head. CSA note: John’s talk with Cody Edison on December 20, 2024 in the CSA Discord, “The Last Slides,” completed John’s original lecture at the Digital Art Symposium, LACMA’s Art and Tech Summit in Miami that took place a few weeks before on December 3, 2024.

For all the motion Vera's piece intends to convey, it is completely still and unchanging - of course there were limits to what she was able to do in her time

But that's kind of where I get excited about “Direct” and "Constant"

The idea of building this piece that is visually simple but almost incomprehensible. Is that really 118 transfers? How are we going to understand it visually if it gets exponentially bigger?

Just like seeing the Himalayas up close, we see it but can we really comprehend how dense it is with just our eyes?

So, in this sense, “Constant 11” is for thinking about the blockchain, about things we can't really comprehend, and there's just a simpleness to the project, aesthetically, and conceptually that tie it together

Jonathan Chomko That's really nice to read! I think one of the things that keeps on drawing me back to blockchain work is that there is interaction that is possible with other people. You put up a proposition, and people meet you on the other side and carry the work on.

CSA2D7 Absolutely. This is not right-click-save art, right? I mean, I made a comment to you a little while back that I wanted this work to be the largest sculpture on the blockchain. You kind of laughed it off, because honestly, who knows what that means? But it's the direction we are trying to seek, right? Aim for Richard Serra and Robert Smithson, large; abstract; sculpture; in a way that is only possible on the blockchain through this participatory performance. “Work comes out of work.”

Can you talk a little bit about your experience with blockchain work (including past projects) because I feel like you are right in it. And we should definitely have 0xfff comment on that in the future, because they too are right in it and created the automated portion of “Direct” (w/ their contract “CSA Mover”) specifically for “Constant 11.” They were also part of the original group of artists in “Constant 0.”

Jonathan Chomko Yes, very grateful for 0xfff's contributions, they were also a big supporter of the project initially

CSA2D7 Yes, and 0xfff started the self-transfer (which you previously mentioned) which we homaged in one of the early phases as well

Jonathan Chomko Yeah 0xfff did a lot of fun experiments! I've been doing blockchain stuff since about 2021. I think I've always been curious about where the value is in art on the blockchain.

My first big project was Proof of Work, which I feel was asking the question 'is a work valuable because a lot of effort has been put in it?'

I feel like "Constant" is saying that value lies in relationships; that it's in the space between buyer and seller that value exists.

CSA2D7 On the "Constant" webpage, there's actually a little generator to test out what "Constant" would look like after X transfers. For me it always stopped working after like 27 transfers or something... but that's clearly not an issue right now for “Constant 11” right? We're at 118 or so, and it is currently in John Gerrard’s possession. Part of testing for me was that little generator, and “0.”

Jonathan Chomko On the "Constant" page the simulator uses a pre-set array of wallet addresses, so it might stop working when that array is exhausted. But "Constant" is rendered on-chain differently from say “Draw by Computer” - I've calculated that it should be able to render up to about 7,000 transfers

CSA2D7 7,000 transfers is a lot. If we reach 7,000... I think we should call up Sotheby's or Christie's

Jonathan Chomko Haha yeah 7,000 is very many

CSA2D7 You wrote: "You put up a proposition, and people meet you on the other side and carry the work on." and I think that participatory aspect is really significant to "Constant"

What we're doing with “Direct,” is kind of a natural extension of "Constant", right - but I think where we might differ is how we see that relationship?

I think I read somewhere that you saw it as kind of a superficial thing - it's just the wallet - but I think I see it as something more - ownership

Jonathan Chomko I think what I meant was that the wallet address doesn't represent a whole person

CSA2D7 Yes! Absolutely. It doesn't

And I agree, but I think there's a transactional depth to letting someone hold "you" (or your artwork, a piece of you) in their wallet and determine the disposition of that thing

Jonathan Chomko Yeah there definitely is! Esp as the work gets denser and denser

CSA2D7 Like when we think about control - you mentioned at coffee that technically, the piece is not generated through the EVM but your server (you can explain this better than me)

So there is still an aspect of control that you theoretically have over the piece as it moves - yes? And this is the nature of some NFTs where the original author still retains some control over how the work appears (esp. if it's a pointer with IPFS)?

Even if it's not in the original author's wallet

Jonathan Chomko I don't actually have any control over the work via the contract, whoever's wallet it's in has full authority to send it or keep it

CSA2D7 Agreed - but you have the ability to shut off your server and control how the work appears?

Jonathan Chomko Yeah to some degree - the way "Constant" is rendered is that an HTML file with an embedded JavaScript script is generated on the contract.

The image link in the NFT's JSON points towards my server, which runs the JavaScript on the generated HTML page, and returns the SVG to the marketplace.

May 2024

Constant

“there are two strains of *nft art* emerging - one is an extension of postinternet as the production of unique images using the ledger as means of distribution. The other strain is creating experiments with the material (code) and structure of the chain itself. Image vs Process” - @brachlandberlin

Constant grew from a few ideas; one was a return to the topic of symmetry, explored in Token Hash among others, and another was the idea for a new mechanic of using wallet addresses as seeds for a generative artwork.

My first explorations stemmed from Natural Static, mirroring random pixels to reveal the inherent lack of randomness in their distribution.

These tests were interesting, but one thing I wanted to bring to Constant from my previous work with Natural Static was an understanding of the context. Most viewers will encounter the work as a thumbnail on an NFT marketplace, where density gets lost.

I reduced the grid of pixels to a line traced through random points, and then mirrored this line, adding small random variations. The two figures reminded me of my choreographic work, how having only two dancers on stage drew thoughts to the relationship between them.

I also explored pixel grid forms, with some Harm van den Dorpel-esque imperfect symmetry studies, but this level of density felt like territory I had explored before, with Proof of Work and Natural Static.

I liked the gesture of moving from the density of Natural Static to the simplicity of two forms.

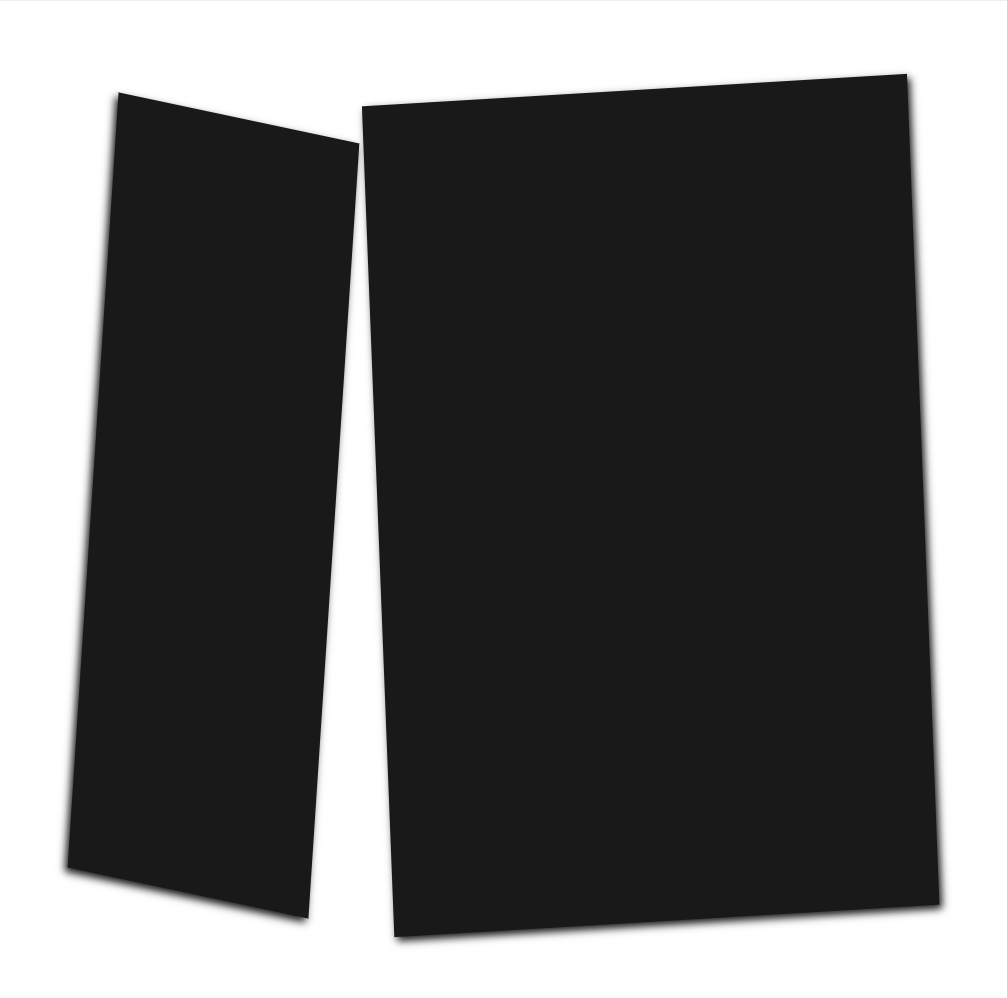

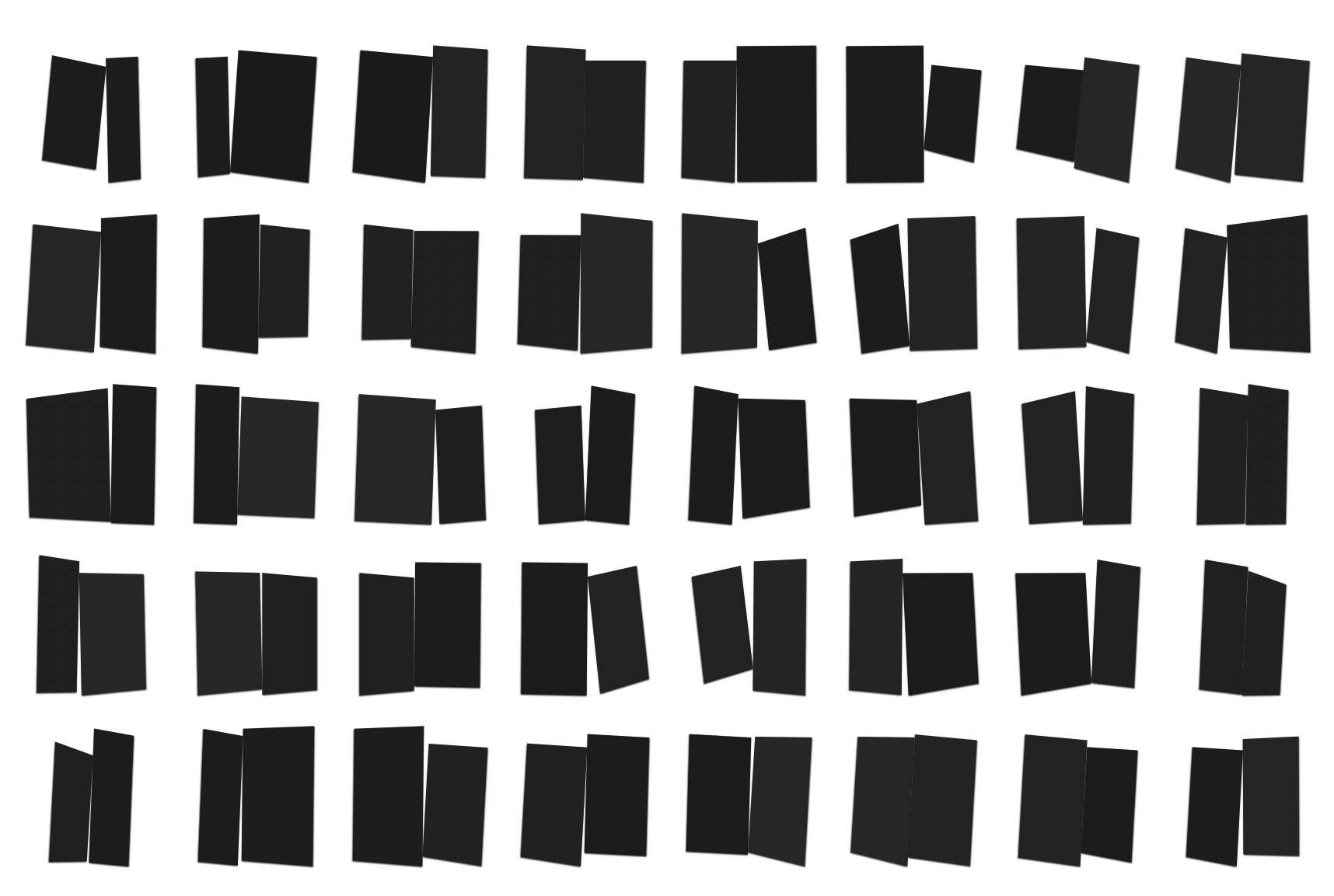

I began working with rectangles and rotation, and saw a clear relational dynamic between them; who takes more space, who is leaning on who, where is the connection?

Throughout this process I was set on the idea that the visual content of the work would be derived from the wallet addresses of two participants; previous and current minter, or seller and buyer.
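As a rough illustration of the address-as-seed idea (not the contract's actual logic), the sketch below maps each wallet address to one rotated rectangle. The `Block` fields, the byte-to-parameter mapping, and the value ranges are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    width: float     # fraction of the canvas width, 0.2 to 1.0 (assumed range)
    height: float    # fraction of the canvas height, 0.2 to 1.0 (assumed range)
    rotation: float  # degrees, -45 to 45 (assumed range)


def block_from_address(address: str) -> Block:
    """Map a 20-byte hex wallet address to one rectangle (hypothetical mapping)."""
    raw = bytes.fromhex(address.removeprefix("0x"))
    if len(raw) != 20:
        raise ValueError("expected a 20-byte address")
    # Treat the first three bytes as independent seeds scaled to 0..1.
    w, h, r = raw[0] / 255, raw[1] / 255, raw[2] / 255
    return Block(width=0.2 + 0.8 * w, height=0.2 + 0.8 * h, rotation=-45 + 90 * r)


def composition(addresses: list[str]) -> list[Block]:
    """One block per address, in the order the addresses are given."""
    return [block_from_address(a) for a in addresses]
```

Passing the previous and current minter's addresses to `composition` yields two blocks, and the same address always produces the same block, so the composition is fully determined by who has held the work.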

The image below shows how Constant would evolve during the mint; each new minted piece contains an element of the one before it.

![]()

Making a work that changes when it is transferred breaks the fundamental value proposition of NFTs; that they are stable artworks that can be bought and sold. Allowing the work to evolve over time changes this value proposition, and points to the relationships between artists and collectors as the source of value.

Throughout my practice, the visual content of a work has not been my primary interest. What excites me more is the creation of a protocol that enables connection, or shapes an experience.

Shadowing is a good example of this. The system captures the movements of people under a streetlamp, and plays them back as shadows. The visual language of the work is there to signal that your participation was recorded, and to show you the movements and gestures others performed under the lamp. The greatest joy of the project was not in creating its visual language but in watching people play under the lamp.

My favourite works from the NFT canon are not those that make pretty pictures with code; they are those that use the affordances of the blockchain to create propositions with pleasing tension; Stevie P’s Money Making Opportunity, or Rhea Myers’ Proof of Existence come to mind.

In a recent interview in Outland, Myers stated that the point of her work is to raise the negative; claiming that a hash on the blockchain is proof of existence immediately raises objections.

“The objections are the point. That’s the point of the artwork—this doesn’t work. Let’s think about why not.” Outland, 2024

Constant works in a similar way - wallet addresses are not equivalent to their owners’ complex personalities, and blockchain transactions are not equivalent to real connection.

But creating a work that shifts each time it changes hands echoes the fragility of our real relationships, and reminds us that behind each blockchain address is you and me.

Constant opens May 29 at 1pm EST on constant.jonathanchomko.com

Natural Static

NFT series, 2023, 260 tokens

Natural Static feeds videos of natural motion into a pixel-based water simulation, creating a representation of physical motion that is both highly digital and deeply analog.

Click Generate on the embedded version below and see the range of the system, or use the full-frame generator.

Natural Static was released with JPG alongside an online exhibition curated by Brian Droitcour and was the subject of a solo exhibition at Public Works Administration in New York City in October 2023. View minted works here.

Traits

The piece uses WebGL to turn each pixel on your screen into its own feedback system. Each pixel has a high probability of sampling its previous state, and a low probability of sampling the underlying video.
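As a rough illustration of that per-pixel rule, here is a minimal Python sketch, not the WebGL shader the piece actually runs. The probability `p_video`, the step size `increment`, the 0 to 255 brightness range and the function name `step` are all assumptions made for the example; the rollover mimics the Increment feedback type.

```python
import random


def step(state, video_frame, p_video=0.05, increment=16, rng=random):
    """Advance a grid of pixel brightness values (0-255) by one frame.

    Hypothetical sketch: each pixel usually samples its own previous
    state, and occasionally (with probability p_video) samples the
    underlying video frame instead.
    """
    next_state = []
    for state_row, video_row in zip(state, video_frame):
        next_row = []
        for previous, video in zip(state_row, video_row):
            # Low probability: read the video. High probability: feed back.
            source = video if rng.random() < p_video else previous
            # Increment-style feedback: brighten, then roll over past max.
            next_row.append((source + increment) % 256)
        next_state.append(next_row)
    return next_state


if __name__ == "__main__":
    rng = random.Random(1)
    state = [[0, 64], [128, 192]]
    video = [[255, 0], [0, 255]]
    for _ in range(3):
        state = step(state, video, rng=rng)
    print(state)
```

With `p_video` close to zero the grid mostly cycles through its own brightness values, and the video only seeps in a few pixels at a time.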

- Feedback Type

  - Increment - pixels are incremented until they reach max brightness, then roll over to min brightness.

  - Decrement - pixels are decremented until they reach min brightness, then roll over to max brightness.

  - RGBIncrement - the red, green and blue channels of each pixel are incremented at varying rates, and are individually rolled over to 0.

  - RGBDecrement - the red, green and blue channels of each pixel are decremented at varying rates, and are individually rolled up to 1.

- Feedback Speed

  - A value between 2 and 5 is used to select the speed from a list of pre-calculated frequencies, which are pulled from the videos using Fourier transform analysis. This analysis finds the dominant frequencies in a signal. Videos with gentle motion will have a lower set of frequency values than videos with strong currents.

- Colour Mode

  - Colour Mode translates the pixels in the feedback system into the final output you see, performing various combinations or translations of the base RGB values in the feedback system.

- Video Speed

  - Either half or full. This sets the rate at which the underlying video is played back.

- Video Id

  - The index of the video which your piece samples from.
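To make the Feedback Speed trait more concrete, the sketch below shows one way dominant frequencies could be pulled from a video with a Fourier transform. It is an illustration under assumptions, not the analysis used for the piece: it treats a single brightness value per frame as the signal, and the function name `dominant_frequencies` and its parameters are hypothetical.

```python
import cmath
import math


def dominant_frequencies(signal, sample_rate, count=4):
    """Return the `count` strongest frequencies (in Hz) in a signal.

    Assumption: `signal` holds one brightness value per video frame and
    `sample_rate` is the frame rate. Uses a plain discrete Fourier
    transform, which is slow but fine for short clips.
    """
    n = len(signal)
    mean = sum(signal) / n
    centered = [value - mean for value in signal]  # drop the constant offset

    ranked = []
    for k in range(1, n // 2 + 1):
        coefficient = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(centered)
        )
        ranked.append((abs(coefficient), k * sample_rate / n))

    ranked.sort(reverse=True)  # strongest component first
    return [frequency for _, frequency in ranked[:count]]


if __name__ == "__main__":
    fps = 30
    # Two seconds of synthetic "video": a strong 2 Hz ripple plus a weak 5 Hz one.
    frames = [
        0.5 + math.sin(2 * math.pi * 2 * t / fps)
        + 0.3 * math.sin(2 * math.pi * 5 * t / fps)
        for t in range(60)
    ]
    print(dominant_frequencies(frames, fps, count=2))
```

On the synthetic input, the two strongest components are the 2 Hz and 5 Hz ripples, strongest first. A clip of gentle water would be expected to concentrate its energy in lower frequencies than a clip of a strong current.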

Information on the filming location, weather and time of day for each video can be obtained by calling getVideoInfo(tokenId) on the contract. If a video file is lost, the artist authorizes the collector to re-create the video using the parameters in the video info file.